Clever cluster

From PlcWiki

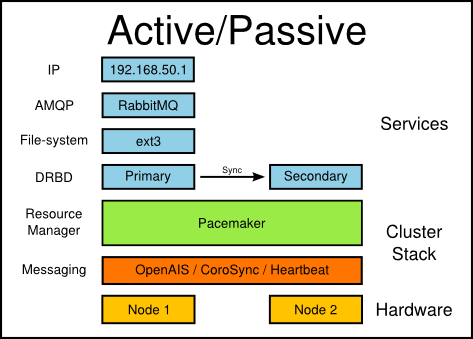

Clever Cluster is a high-availability (HA) cluster solution that keeps a critical Clever server running when a hardware failure occurs. The Clever server is a virtual machine that can be hosted on any of several cluster nodes. To make this possible, the virtual drive of the Clever server has to be stored on a shared resource.

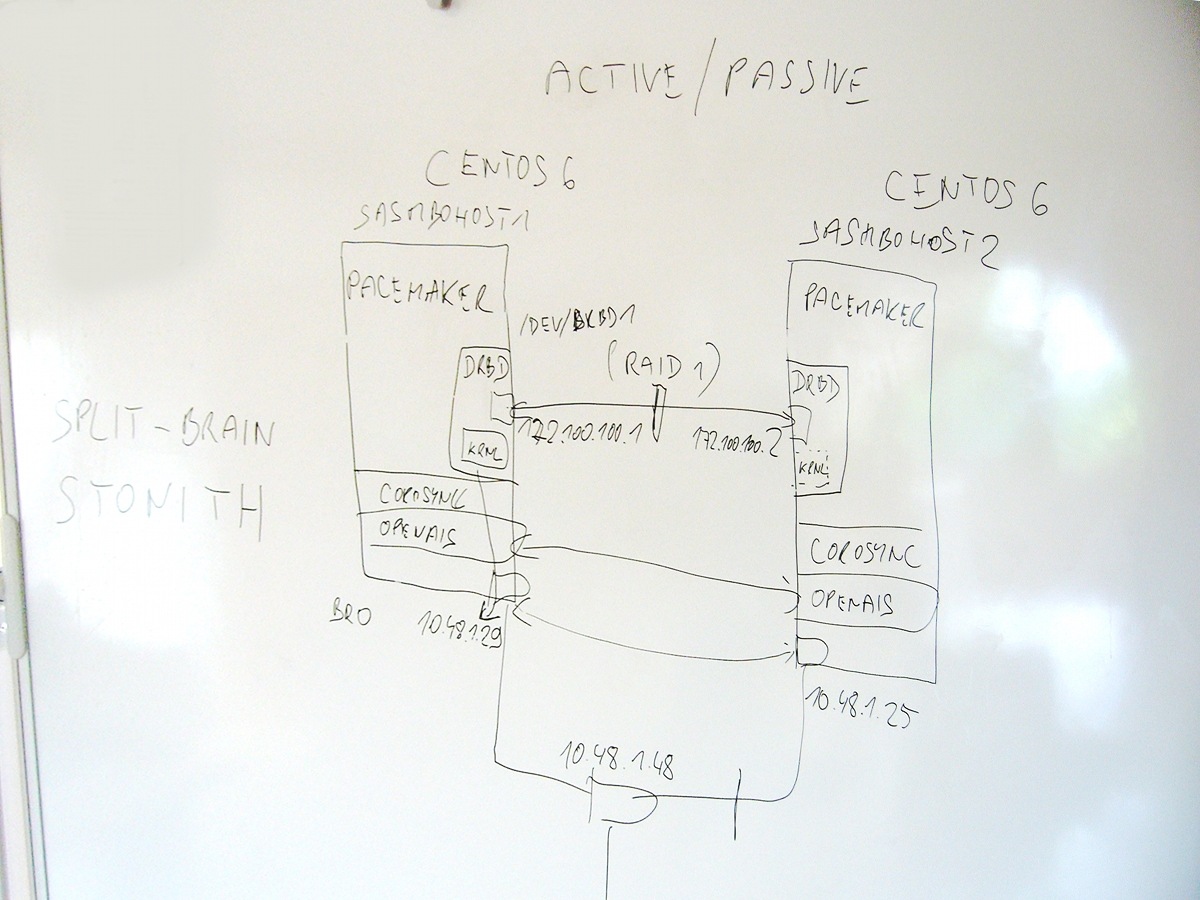

We use a technology called DRBD (Distributed Replicated Block Device) for that purpose. DRBD can be seen as RAID 1 over the network. Only one node can be the DRBD primary at a time; the others are DRBD secondaries. The DRBD block device can be mounted only in the primary role. In the secondary role, a node just keeps its state synchronized with the primary DRBD node. To make the synchronization reliable, the nodes must be interconnected with a direct network link, and that link has to be kept working at all costs. We therefore recommend bonding two or more NICs dedicated solely to this purpose.
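The single-primary rule can be illustrated with a small Python model. This is a hypothetical sketch, not part of the Clever tooling: the class `DrbdResource` and its methods are invented for illustration, and real DRBD enforces these rules in its kernel module and `drbdadm`, not in user code.

```python
from dataclasses import dataclass
from typing import List, Optional


class DrbdError(Exception):
    """Raised when an operation would violate the single-primary rule."""


@dataclass
class DrbdResource:
    """Toy model of one DRBD resource shared by several nodes (illustration only)."""

    nodes: List[str]
    primary: Optional[str] = None
    mounted: bool = False

    def role(self, node: str) -> str:
        return "Primary" if node == self.primary else "Secondary"

    def promote(self, node: str) -> None:
        if node not in self.nodes:
            raise DrbdError(f"unknown node {node}")
        if self.primary not in (None, node):
            # Single-primary mode: a second primary is refused.
            raise DrbdError(f"{self.primary} is already primary")
        self.primary = node

    def mount(self, node: str) -> None:
        # The block device may be mounted only on the primary node.
        if node != self.primary:
            raise DrbdError(f"{node} is secondary and cannot mount the device")
        self.mounted = True

    def unmount(self, node: str) -> None:
        if node == self.primary:
            self.mounted = False

    def demote(self, node: str) -> None:
        if node != self.primary:
            raise DrbdError(f"{node} is not primary")
        if self.mounted:
            raise DrbdError("unmount the device before demoting")
        self.primary = None
```

A controlled switch-over in this model is unmount, demote, then promote on the other node; any attempt to promote a second node while the first is still primary raises `DrbdError`.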

When a hardware failure occurs on the active (primary) node, any currently inactive node can be promoted to the primary role; the shared storage can then be mounted and the virtual machine started on that node without much hassle. The new primary node should contain all the changes written to the DRBD storage before the failure, because every modification is transferred to the secondary nodes almost immediately.
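The manual procedure might look roughly like the following Python sketch, which only prints the commands by default. The defaults are assumptions read off the status output further down this page (DRBD minor 1 with resource name a3 from the `1:a3/0` line, mount point /opt, guest plcmba7krnl) and may not match your installation; the sketch also assumes the guest is defined in libvirt on the surviving node. Such steps should be run only when the former primary is really down: promoting a node while a disconnected peer is still primary produces exactly the split-brain described at the end of this page.

```python
import shlex
import subprocess
from typing import List


def failover_commands(resource: str = "a3",
                      device: str = "/dev/drbd1",
                      mountpoint: str = "/opt",
                      domain: str = "plcmba7krnl") -> List[List[str]]:
    """Manual fail-over steps on the surviving node (all defaults are assumptions)."""
    return [
        ["drbdadm", "primary", resource],  # 1. promote the local DRBD resource
        ["mount", device, mountpoint],      # 2. mount the replicated storage
        ["virsh", "start", domain],         # 3. start the Clever guest
    ]


def run_failover(dry_run: bool = True, **kwargs: str) -> None:
    for cmd in failover_commands(**kwargs):
        if dry_run:
            print(shlex.join(cmd))  # only show what would be executed
        else:
            subprocess.run(cmd, check=True)  # stop at the first failing step


if __name__ == "__main__":
    run_failover()  # dry run: prints the three commands
```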

Such a fail-over procedure is simple, but it requires human intervention. We also need to make sure that one and only one node acts as the primary at any time. To automate the whole process, we need an enterprise-level cluster stack.

Our cluster stack of choice is Pacemaker + Corosync (an OpenAIS implementation), a solution preferred by both Red Hat and Novell.

More about Pacemaker: http://www.clusterlabs.org and http://www.slideshare.net/DanFrincu/pacemaker-drbd

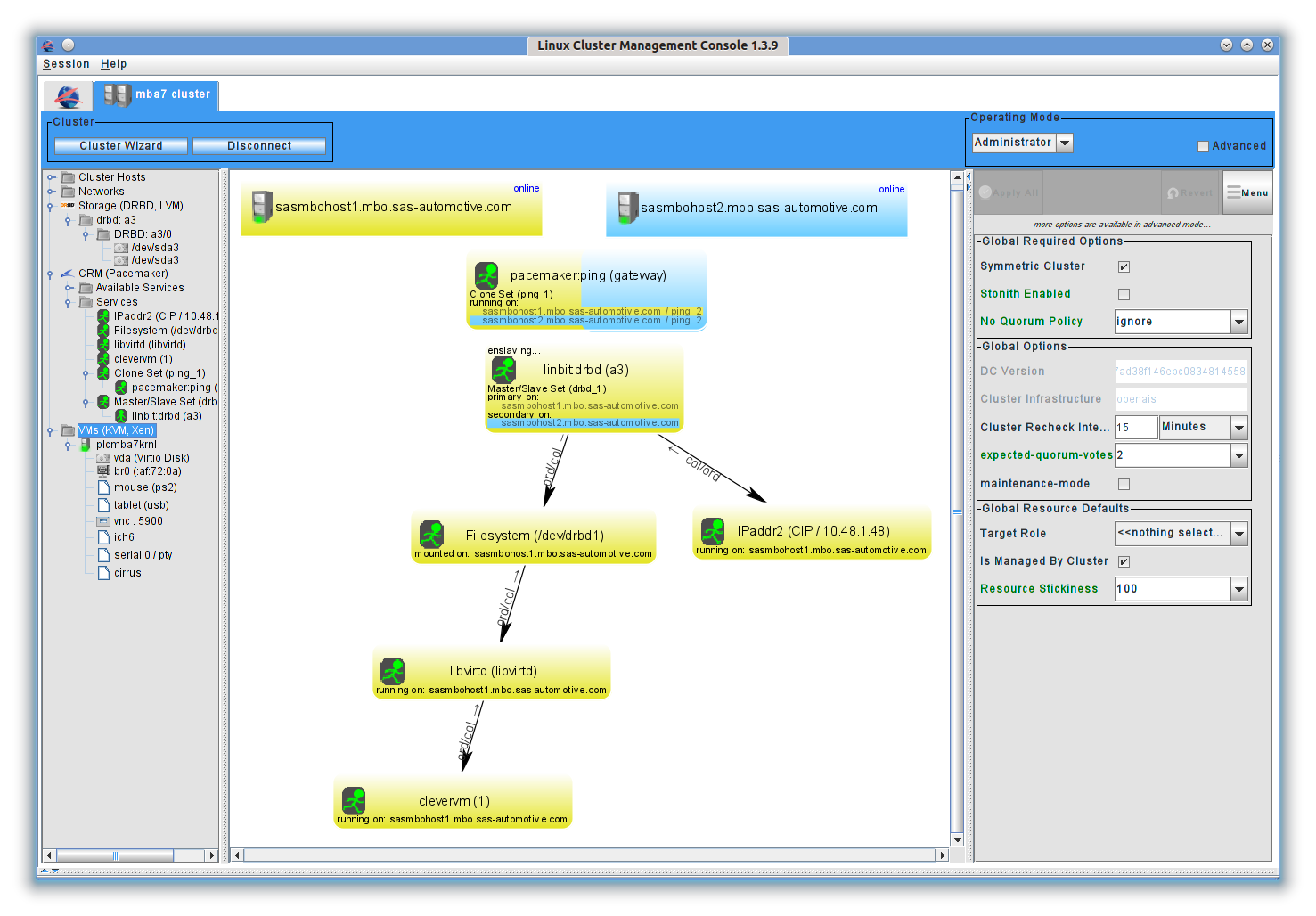

Pacemaker's job is to keep the replicated DRBD storage mounted on one and only one active node and, as a dependency, to move the virtualized Clever server to that node.

At the same time, it tests external connectivity on all nodes using pingd (which sends ICMP packets to the gateway) and automatically promotes to the primary role a node that has not lost connectivity.
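The connectivity check can be pictured as follows. The `ocf:pacemaker:ping` agent (res_ping_gateway in the status output) publishes a node attribute, named `pingd` by default, whose value grows with the number of reachable ping targets; a location rule can then forbid the master role on nodes where that attribute is missing or zero. The Python functions below are a hypothetical simplification of that decision; the multiplier and the rule are assumptions, not the actual Clever Cluster configuration.

```python
from typing import Dict, List, Optional


def ping_score(reachable_hosts: int, multiplier: int) -> int:
    """Attribute value published by the ping agent: reachable hosts x multiplier."""
    return reachable_hosts * multiplier


def eligible_nodes(attrs: Dict[str, Optional[int]]) -> List[str]:
    """Mimics a rule such as '-inf: not_defined pingd or pingd lte 0'."""
    return sorted(node for node, value in attrs.items() if value is not None and value > 0)


def choose_primary(attrs: Dict[str, Optional[int]],
                   current: Optional[str] = None) -> Optional[str]:
    eligible = eligible_nodes(attrs)
    if current in eligible:
        return current  # connectivity is fine: stay where we are
    return eligible[0] if eligible else None  # otherwise pick a connected node
```

For example, if the current primary loses its gateway (score 0) while the other node still reaches it, `choose_primary` returns the other node; if no node reaches the gateway, it returns `None`.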

Because of the defined dependencies, the virtualized server is moved together with the DRBD storage. In a controlled migration, the server is first hibernated on the original node and then resumed on the target node. The whole operation takes about 2 minutes or less; even an open SSH session survives it. A migration can also be initiated manually, either with a command or with a dedicated application.
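The dependency chain determines the order in which the resources are started and stopped. The following Python sketch (3.9+, for `graphlib`) derives both orders from a dependency graph. The resource names come from the status output on this page, but the edges between them are an assumption, since the actual order and colocation constraints are not listed here.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Assumed dependencies (resource -> resources it needs). Names are taken from
# the status output; the edges are illustrative, not the real CIB constraints.
DEPENDS_ON = {
    "res_Filesystem_1": {"res_drbd_1"},             # mount /opt only on the DRBD master
    "res_libvirtd_libvirtd": {"res_Filesystem_1"},
    "res_clevervm_1": {"res_libvirtd_libvirtd"},
    "res_IPaddr2_CIP": {"res_drbd_1"},              # service IP follows the master
}


def start_order(graph=DEPENDS_ON):
    """Order in which a cluster manager could start the resources."""
    return list(TopologicalSorter(graph).static_order())


def stop_order(graph=DEPENDS_ON):
    """Stopping (e.g. before a migration) happens in reverse order."""
    return start_order(graph)[::-1]
```

In this model the guest is the first thing stopped and the DRBD master the last, which matches the hibernate-first, resume-last sequence described above.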

Another resource that is automatically moved together with the primary DRBD storage is a special IP address denoting the active node.

For convenient cluster management, we recommend installing the Linux Cluster Management Console (LCMC): http://sourceforge.net/projects/lcmc/files/

When migrating resources manually, always operate on the DRBD resource; all the other services are configured as dependent on it.

The cluster can also be controlled from the console with service clevervm, a service created for this purpose (in most cases, it does not matter on which node the command is issued).

service clevervm status

Displays a verbose cluster status, for example:

```
============
Last updated: Thu May 31 12:42:05 2012
Last change: Thu May 31 12:33:14 2012 via cibadmin on sasmbohost1.mbo.sas-automotive.com
Stack: openais
Current DC: sasmbohost1.mbo.sas-automotive.com - partition with quorum
Version: 1.1.6-3.el6-a02c0f19a00c1eb2527ad38f146ebc0834814558
2 Nodes configured, 2 expected votes
8 Resources configured.
============

Node sasmbohost2.mbo.sas-automotive.com: online
    res_drbd_1:1 (ocf::linbit:drbd) Master
    res_Filesystem_1 (ocf::heartbeat:Filesystem) Started
    res_clevervm_1 (lsb:clevervm) Started
    res_libvirtd_libvirtd (lsb:libvirtd) Started
    res_ping_gateway:1 (ocf::pacemaker:ping) Started
    res_IPaddr2_CIP (ocf::heartbeat:IPaddr2) Started
Node sasmbohost1.mbo.sas-automotive.com: online
    res_ping_gateway:0 (ocf::pacemaker:ping) Started
    res_drbd_1:0 (ocf::linbit:drbd) Slave

Inactive resources:

Migration summary:
* Node sasmbohost2.mbo.sas-automotive.com:
* Node sasmbohost1.mbo.sas-automotive.com:

====== DRBD storage =======
1:a3/0 Connected Primary/Secondary UpToDate/UpToDate A r----- /opt ext4 253G 107G 134G 45%

==== Virtual machines =====
---------------------------
Name: plcmba7krnl
State: running
Managed save: no
---------------------------
```
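The status output can also be processed by scripts, for example to find out which node currently holds the DRBD master role. The parser below is a hypothetical sketch that assumes exactly the layout in the example (resources grouped by node, one `drbd-overview`-style line in the DRBD section); other Pacemaker or DRBD versions may format their output differently.

```python
import re
from typing import Dict, Optional

NODE_RE = re.compile(r"^Node (\S+): (\w+)")
RESOURCE_RE = re.compile(r"^(\S+)\s+\(([^)]+)\)\s+(\w+)$")


def resources_by_node(status_text: str) -> Dict[str, Dict[str, str]]:
    """Map each node to {resource: role} from the per-node resource listing."""
    result: Dict[str, Dict[str, str]] = {}
    node: Optional[str] = None
    for raw in status_text.splitlines():
        line = raw.strip()
        if line.startswith(("Inactive resources", "Migration summary")):
            node = None  # the per-node listing ends here
            continue
        match = NODE_RE.match(line)
        if match:
            node = match.group(1)
            result[node] = {}
            continue
        match = RESOURCE_RE.match(line)
        if match and node is not None:
            name = match.group(1).split(":")[0]  # drop clone suffix such as ':1'
            result[node][name] = match.group(3)
    return result


def master_node(status_text: str, resource: str = "res_drbd_1") -> Optional[str]:
    """Return the node on which the given resource runs in the Master role."""
    for node, resources in resources_by_node(status_text).items():
        if resources.get(resource) == "Master":
            return node
    return None


def parse_drbd_overview(line: str) -> Dict[str, object]:
    """Split one DRBD status line; field positions are assumed from the example."""
    fields = line.split()
    minor, resource = fields[0].split(":", 1)
    role, peer_role = fields[2].split("/")
    disk, peer_disk = fields[3].split("/")
    return {
        "minor": int(minor), "resource": resource, "cstate": fields[1],
        "role": role, "peer_role": peer_role, "disk": disk, "peer_disk": peer_disk,
        "protocol": fields[4], "mountpoint": fields[6], "fstype": fields[7],
        "use_pct": fields[-1],
    }
```

In practice, the text could be obtained with `subprocess.run(["service", "clevervm", "status"], capture_output=True, text=True).stdout` and passed to `master_node()`.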

service clevervm start

Starts all Clever KVM virtual guests (guests whose names start with plc). Beware: if the clevervm service is marked as stopped in Pacemaker, this brings the resource into an inconsistent state that then has to be fixed. Using this command is not recommended, nor is starting the virtual guests directly with virsh.

service clevervm forcestart

Starts all Clever KVM virtual guests indirectly, through Pacemaker. This is useful if the service was previously marked as stopped in Pacemaker.

service clevervm stop

Stops (hibernates) all Clever KVM virtual guests. Beware: if the clevervm service is marked as started in Pacemaker, Pacemaker will start the guests again. Using this command is not recommended, nor is hibernating or stopping the virtual guests directly with virsh.

service clevervm forcestop

Stops all Clever KVM virtual guests indirectly, through Pacemaker, and marks the service as stopped in Pacemaker.
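What the force variants might do can be sketched in Python. The page states only that forcestart and forcestop act through Pacemaker; the mapping to `crm resource start` / `crm resource stop` on res_clevervm_1 below is an assumption. Those crm shell commands set the resource's target-role, which is why Pacemaker afterwards keeps the guests in the requested state instead of reverting the change.

```python
import shlex
import subprocess
from typing import List

RESOURCE = "res_clevervm_1"

# Assumed mapping; the real clevervm service script may differ.
FORCE_COMMANDS = {
    "forcestart": ["crm", "resource", "start", RESOURCE],  # target-role=Started
    "forcestop": ["crm", "resource", "stop", RESOURCE],    # target-role=Stopped
}


def force_command(sub: str) -> List[str]:
    """Return the crm command line for a force* subcommand."""
    try:
        return list(FORCE_COMMANDS[sub])
    except KeyError:
        raise ValueError(f"unsupported subcommand: {sub}") from None


def run(sub: str, dry_run: bool = True) -> None:
    cmd = force_command(sub)
    if dry_run:
        print(shlex.join(cmd))  # only show the command
    else:
        subprocess.run(cmd, check=True)
```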

service clevervm cleanup

Cleans up the clevervm cluster resource (executes crm resource cleanup res_clevervm_1). This clears any failure records, so the service can be started again after the maximum failure limit has been reached.
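The failure limit behaves roughly like Pacemaker's `migration-threshold` meta attribute: each failure increases a per-node fail count, and once the count reaches the threshold, the resource may no longer run on that node until the failures are cleaned up. The following Python model is a hypothetical simplification; the threshold actually configured for res_clevervm_1 is not stated here.

```python
from collections import defaultdict
from typing import Optional


class FailCounter:
    """Toy model of per-node fail counts checked against a migration threshold."""

    def __init__(self, migration_threshold: int) -> None:
        self.migration_threshold = migration_threshold
        self.fail_count = defaultdict(int)

    def record_failure(self, node: str) -> None:
        self.fail_count[node] += 1

    def can_run_on(self, node: str) -> bool:
        # Once the count reaches the threshold, the node is no longer eligible.
        return self.fail_count[node] < self.migration_threshold

    def cleanup(self, node: Optional[str] = None) -> None:
        # Comparable to 'crm resource cleanup': forget the failure history,
        # for one node or, if none is given, for all nodes.
        if node is None:
            self.fail_count.clear()
        else:
            self.fail_count.pop(node, None)
```

With a threshold of 3, three failures on one node make it ineligible until `cleanup()` runs, which corresponds to service clevervm cleanup.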

service clevervm migrate

Forces Pacemaker to migrate the primary DRBD storage (and hence all the dependent services) away from this node, i.e. to the other node. The current node is then marked as not eligible to run the services. Use service clevervm unmigrate afterwards to remove this constraint; otherwise, the current node will not be able to become primary again when a fail-over is needed.

service clevervm unmigrate

Removes the "not eligible for running services" constraint from this node. This does not mean that the services will be migrated back to this node: a certain level of stickiness prevents frequent fluctuation.
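The interplay of the migrate constraint and stickiness can be modelled as a simple score comparison. In this hypothetical sketch, a ban stands for the -INFINITY location constraint left behind by migrate, the preference and stickiness values are invented, and ties are broken by node name, which is a simplification of Pacemaker's real placement logic.

```python
from typing import Dict, Iterable, Optional, Set


def place(nodes: Iterable[str], preference: Dict[str, int], banned: Set[str],
          current: Optional[str] = None, stickiness: int = 0) -> Optional[str]:
    """Pick the node with the highest score; banned nodes (-INFINITY) are skipped."""
    scores = {}
    for node in nodes:
        if node in banned:
            continue  # 'migrate' leaves a -INFINITY constraint on the old node
        score = preference.get(node, 0)
        if node == current:
            score += stickiness  # staying put is rewarded
        scores[node] = score
    if not scores:
        return None
    best = max(scores.values())
    return sorted(n for n, s in scores.items() if s == best)[0]  # deterministic tie-break
```

In this model, banning host1 moves the resources to host2; after the ban is lifted, a stickiness of 100 outweighs host1's preference of 50, so the resources stay on host2.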

Split-brain

Split-brain situations should be avoided at all costs. They happen when the connection between the nodes is broken and two or more nodes start acting as primary. Should such a situation arise, human intervention is required to resolve the problem and prevent data loss.

More about DRBD split-brain recovery: http://www.drbd.org/users-guide/s-resolve-split-brain.html
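A rough Python sketch of spotting a suspected split-brain and of the manual recovery steps follows. The detection heuristic is an assumption, and the `drbdadm` sequence follows the DRBD 8.4 user's guide linked above (older 8.3 releases use a different syntax); the resource name a3 is taken from the status output and may differ on your cluster. Before running the victim's steps, the guest has to be stopped and /opt unmounted on that node, and the victim's changes made since the split are discarded.

```python
from typing import Dict, List


def split_brain_suspected(a: Dict[str, str], b: Dict[str, str]) -> bool:
    """Heuristic on the 'role' and 'cstate' fields of both nodes' DRBD status."""
    if a["role"] == "Primary" and b["role"] == "Primary":
        return True  # this cluster runs single-primary, so two primaries is wrong
    # DRBD drops the connection (StandAlone) after detecting split-brain;
    # StandAlone can also mean a manual disconnect, so check the kernel log too.
    return "StandAlone" in (a["cstate"], b["cstate"])


def recovery_plan(resource: str, survivor_cstate: str) -> Dict[str, List[List[str]]]:
    """drbdadm steps assumed from the DRBD 8.4 guide; the victim's data is discarded."""
    victim = [
        ["drbdadm", "disconnect", resource],
        ["drbdadm", "secondary", resource],
        ["drbdadm", "connect", "--discard-my-data", resource],
    ]
    # The survivor only needs to reconnect if it is StandAlone as well.
    survivor = [["drbdadm", "connect", resource]] if survivor_cstate == "StandAlone" else []
    return {"victim": victim, "survivor": survivor}
```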