Cluster MBA7 (English)

From PlcWiki


Current revision as of 10:12, 29 April 2020

It turned out that running KVM and DRBD in a cluster without a dedicated cluster manager is possible, but many routine tasks then become too complicated, and supporting them would require a lot of additional work.

One example for all: starting the VM kernel when the host machine starts. The kernel may run on only one node at a time, so it must not be booted if an instance is already running on the other node, and checking that requires communication between the two nodes.
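A minimal sketch of the kind of check a hand-rolled solution would need, assuming the peer's libvirtd is reachable over the `qemu+ssh` transport. The `PEER` default and the `VIRSH` override are assumptions made for illustration; the domain name `plcmba7krnl` is the one shown in the status output on this page.

```shell
#!/bin/sh
# Sketch only: refuse to start the VM locally unless the peer positively
# reports that it is not running there.
# PEER and VIRSH are illustrative assumptions, not part of the original setup.
PEER="${PEER:-sasmbohost2.mbo.sas-automotive.com}"
DOMAIN="${DOMAIN:-plcmba7krnl}"
VIRSH="${VIRSH:-virsh}"

# Prints the domain state as seen by the peer's libvirtd,
# or "unknown" if the peer cannot be asked.
peer_vm_state() {
    $VIRSH -c "qemu+ssh://${PEER}/system" domstate "$DOMAIN" 2>/dev/null \
        || echo "unknown"
}

# Returns 0 only when the peer reports the VM as shut off.
safe_to_start() {
    case "$(peer_vm_state)" in
        "shut off") return 0 ;;
        *)          return 1 ;;   # running, paused, unknown: do not start
    esac
}
```

It could be used as `safe_to_start && virsh start "$DOMAIN"`. Note that an unreachable peer yields "unknown", so the VM would never start while the other node is down. Handling that case safely is exactly the kind of extra work a cluster manager takes over.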

Of course, all this can be implemented, but it is much better to use established and proven solutions.

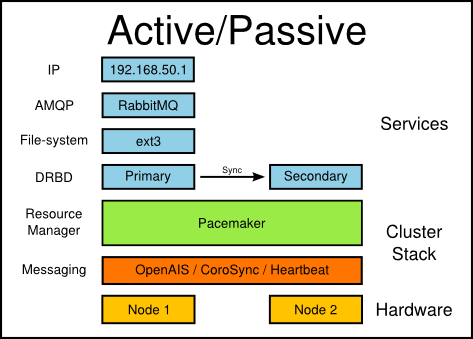

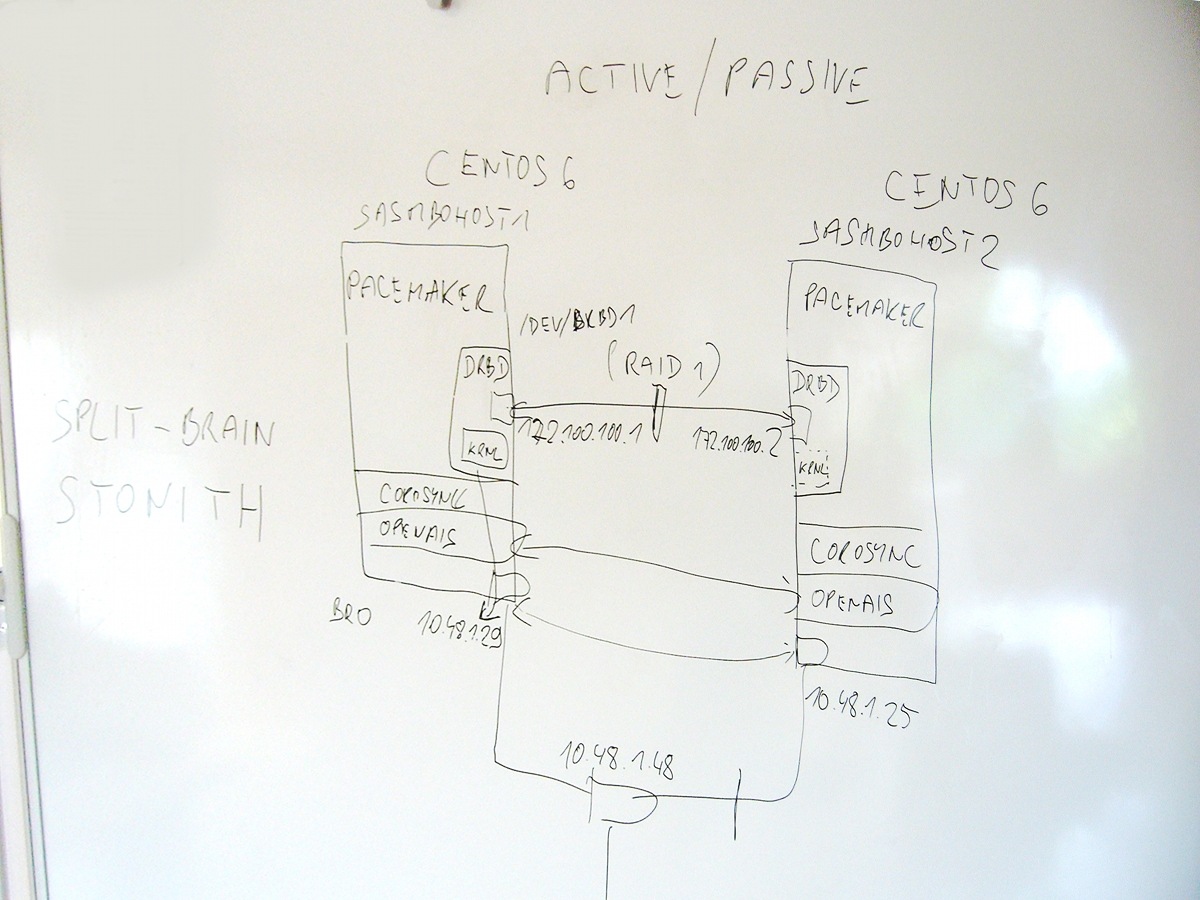

I chose Pacemaker + Corosync (OpenAIS), a cluster stack preferred by both Red Hat and Novell. More about Pacemaker at http://www.clusterlabs.org or http://www.slideshare.net/DanFrincu/pacemaker-drbd .

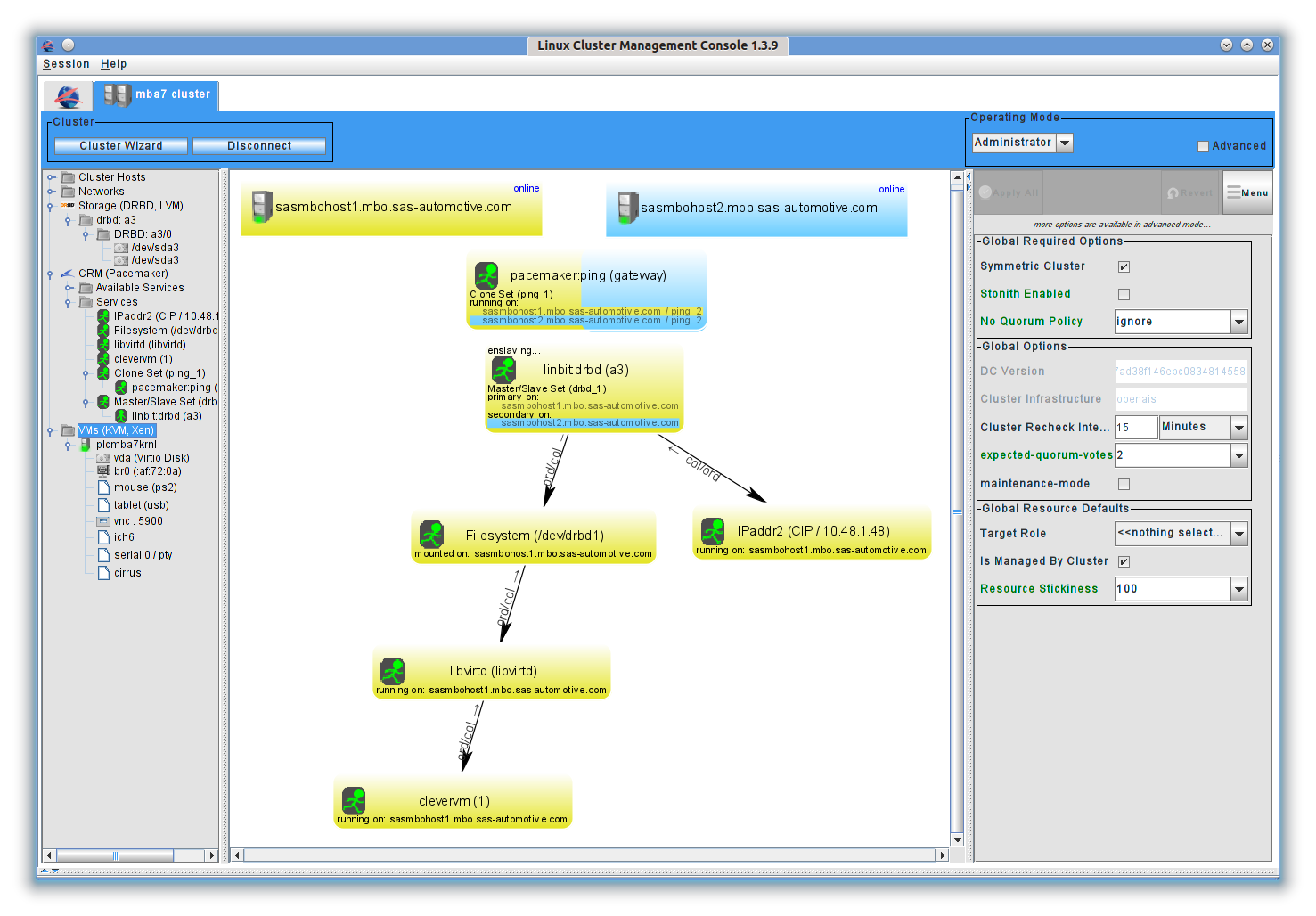

In our case, Pacemaker ensures that the replicated DRBD storage is mounted on exactly one live node and that the virtualized kernel, which depends on it, runs on that same node. It also uses pingd (a connectivity test against 10.48.1.240 and 10.48.1.3) to monitor the connectivity of each node and automatically moves the primary DRBD storage to a node that has not lost connectivity.
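The following sketch shows how such dependencies are typically written in crm shell syntax. It is not this cluster's actual configuration: the `res_*` resource names come from the status output on this page, while the ids `ms_drbd_1`, `grp_services`, `loc_drbd_needs_ping`, `col_services_on_drbd` and `ord_drbd_before_services`, and the order of resources in the group, are assumptions. The attribute name `pingd` is the default used by the `ocf:pacemaker:ping` agent.

```shell
#!/bin/sh
# Sketch only: prints a crm shell fragment for review. Nothing is applied.
# All ids except the res_* resource names are hypothetical.
print_constraints() {
    cat <<'EOF'
# Services that must run where the DRBD storage is primary.
group grp_services res_Filesystem_1 res_libvirtd_libvirtd res_clevervm_1 res_IPaddr2_CIP
# Never promote DRBD on a node that cannot reach the ping targets.
location loc_drbd_needs_ping ms_drbd_1 \
    rule $role="Master" -inf: not_defined pingd or pingd lte 0
# The services run only on the DRBD Master and start after promotion.
colocation col_services_on_drbd inf: grp_services ms_drbd_1:Master
order ord_drbd_before_services inf: ms_drbd_1:promote grp_services:start
EOF
}
```

The location rule is what moves the primary storage away from a node that has lost connectivity; the colocation and order constraints are what make the filesystem, the virtual machine and the cluster IP follow it.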

Because of these dependencies, the virtualized kernel moves together with the DRBD storage. The kernel is moved by hibernating it on the original node and resuming it on the target node. The whole operation usually completes within two minutes (typically in 90 s) and does not, for example, interrupt open SSH sessions. Migration can also be triggered manually, either by a command or by a special application.

Another resource that automatically moves along with the primary DRBD storage is the IP address 10.48.1.48, which I took the liberty of using for this purpose (i.e., it designates the node on which the kernel is currently running).

For convenient cluster control, it is good to install the Linux Cluster Management Console: http://sourceforge.net/projects/lcmc/files/

From the console, it is possible to control the cluster using the LSB service clevervm, which I created for this purpose (with some exceptions, it does not matter on which node the commands are entered):

service clevervm status

Displays the detailed status of the cluster, for example:

    ============
    Last updated: Thu May 31 12:42:05 2012
    Last change: Thu May 31 12:33:14 2012 via cibadmin on sasmbohost1.mbo.sas-automotive.com
    Stack: openais
    Current DC: sasmbohost1.mbo.sas-automotive.com - partition with quorum
    Version: 1.1.6-3.el6-a02c0f19a00c1eb2527ad38f146ebc0834814558
    2 Nodes configured, 2 expected votes
    8 Resources configured.
    ============
    Node sasmbohost2.mbo.sas-automotive.com: online
    res_drbd_1:1 (ocf::linbit:drbd) Master
    res_Filesystem_1 (ocf::heartbeat:Filesystem) Started
    res_clevervm_1 (lsb:clevervm) Started
    res_libvirtd_libvirtd (lsb:libvirtd) Started
    res_ping_gateway:1 (ocf::pacemaker:ping) Started
    res_IPaddr2_CIP (ocf::heartbeat:IPaddr2) Started
    Node sasmbohost1.mbo.sas-automotive.com: online
    res_ping_gateway:0 (ocf::pacemaker:ping) Started
    res_drbd_1:0 (ocf::linbit:drbd) Slave
    Inactive resources:
    Migration summary:
    * Node sasmbohost2.mbo.sas-automotive.com:
    * Node sasmbohost1.mbo.sas-automotive.com:
    ====== DRBD storage =======
    1:a3/0 Connected Primary/Secondary UpToDate/UpToDate A r----- /opt ext4 253G 107G 134G 45%
    ==== Virtual machines =====
    ---------------------------
    Name: plcmba7krnl
    State: running
    Managed save: no
    ---------------------------
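For scripting, the node that currently holds the primary DRBD storage can be picked out of this output. The function below is a small sketch that assumes the status text keeps the layout shown above (a `Node <name>: online` line followed by that node's resources); the function name is made up for this example.

```shell
#!/bin/sh
# Sketch only: read cluster status text on standard input and print the
# name of the node whose DRBD resource is in the Master role.
drbd_master_node() {
    awk '
        # Remember the node whose resource list we are currently reading.
        /^[[:space:]]*Node .*: online/ { node = $2; sub(/:$/, "", node) }
        # The first DRBD resource in the Master role decides the answer.
        /ocf::linbit:drbd/ && /Master[[:space:]]*$/ { print node; exit }
    '
}
```

Usage: `service clevervm status | drbd_master_node`. For the status shown above it prints `sasmbohost2.mbo.sas-automotive.com`.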

service clevervm start

Starts the virtual machine(s). However, if the service is marked as stopped in Pacemaker, the actual state and the state recorded by Pacemaker will disagree, and the inconsistency will then have to be resolved. I recommend not using this command, nor starting a virtual machine directly with virsh, which has a similar effect.

service clevervm forcestart

Starts the virtual machine(s) through Pacemaker. This is useful if the service has previously been marked as stopped in Pacemaker.

service clevervm stop

Stops (hibernates) the virtual machine(s). However, if the service is marked as running in Pacemaker, Pacemaker will restart it. I recommend not using this command, nor hibernating the virtual machine directly with virsh, which has a similar effect.

service clevervm forcestop

Stops the virtual machine(s) through Pacemaker and marks the service as stopped.

service clevervm cleanup

Clears the service status, i.e. any failure records, so that the service can restart on a node where the allowed number of failures has been reached.

service clevervm migrate

Forces Pacemaker to migrate the primary DRBD storage (and thus all dependent services) away from this node (i.e., to the other node). This node is then marked as unfit to run the services. To remove this restriction, call the unmigrate command after the migration.

service clevervm unmigrate

Removes the "unfit to run services" flag from this node. This does not automatically move the services back, because a certain inertia (stickiness) keeps services from bouncing between nodes.
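The migrate and unmigrate commands are meant to be used as a pair. The sketch below chains them: it requests the migration, waits until the status reports the DRBD Master on another node, and only then lifts the restriction. The function name, the timeout values, the `SELF` override, and the assumption that `uname -n` matches the node name used by Pacemaker are all illustrative.

```shell
#!/bin/sh
# Sketch only: move services off this node, wait until the DRBD Master is
# reported elsewhere, then remove the restriction again.
# Arguments: [timeout in seconds] [poll interval in seconds]
migrate_and_release() {
    timeout="${1:-180}"
    interval="${2:-5}"
    self="${SELF:-$(uname -n)}"   # assumed to equal the Pacemaker node name

    service clevervm migrate || return 1

    waited=0
    while [ "$waited" -lt "$timeout" ]; do
        # Find the node that currently holds the Master role.
        master="$(service clevervm status | awk '
            /^[[:space:]]*Node .*: online/ { n = $2; sub(/:$/, "", n) }
            /ocf::linbit:drbd/ && /Master[[:space:]]*$/ { print n; exit }')"
        if [ -n "$master" ] && [ "$master" != "$self" ]; then
            service clevervm unmigrate
            return $?
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done

    echo "Master still not moved after ${timeout}s; restriction left in place" >&2
    return 1
}
```

Because of the stickiness described above, removing the flag at the end does not pull the services back to this node.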

Split-brain

DRBD split-brain recovery: http://www.drbd.org/users-guide/s-resolve-split-brain.html
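As a quick reminder of what the manual procedure in that guide looks like, the sketch below prints the commands for each side instead of running them, because the victim side throws away its local changes. It assumes DRBD 8.4 command syntax (DRBD 8.3 places the `--discard-my-data` option differently) and assumes the resource is called `a3`, which is read from the `1:a3/0` entry in the status output on this page. Under Pacemaker, the cluster may additionally have to be told to leave the DRBD resource alone during recovery; that step is not shown.

```shell
#!/bin/sh
# Sketch only: print (do not run) manual split-brain recovery commands,
# assuming DRBD 8.4 syntax. The "victim" is the node whose changes are
# discarded; the "survivor" keeps its data.
split_brain_commands() {
    role="$1"
    res="$2"
    case "$role" in
        victim)
            echo "drbdadm disconnect $res"
            echo "drbdadm secondary $res"
            echo "drbdadm connect --discard-my-data $res"
            ;;
        survivor)
            # Only needed if the survivor is in the StandAlone state too.
            echo "drbdadm connect $res"
            ;;
        *)
            echo "usage: split_brain_commands victim|survivor RESOURCE" >&2
            return 2
            ;;
    esac
}
```

For example, `split_brain_commands victim a3` lists the three commands to review and then run by hand on the node whose data is to be discarded.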