Clever cluster



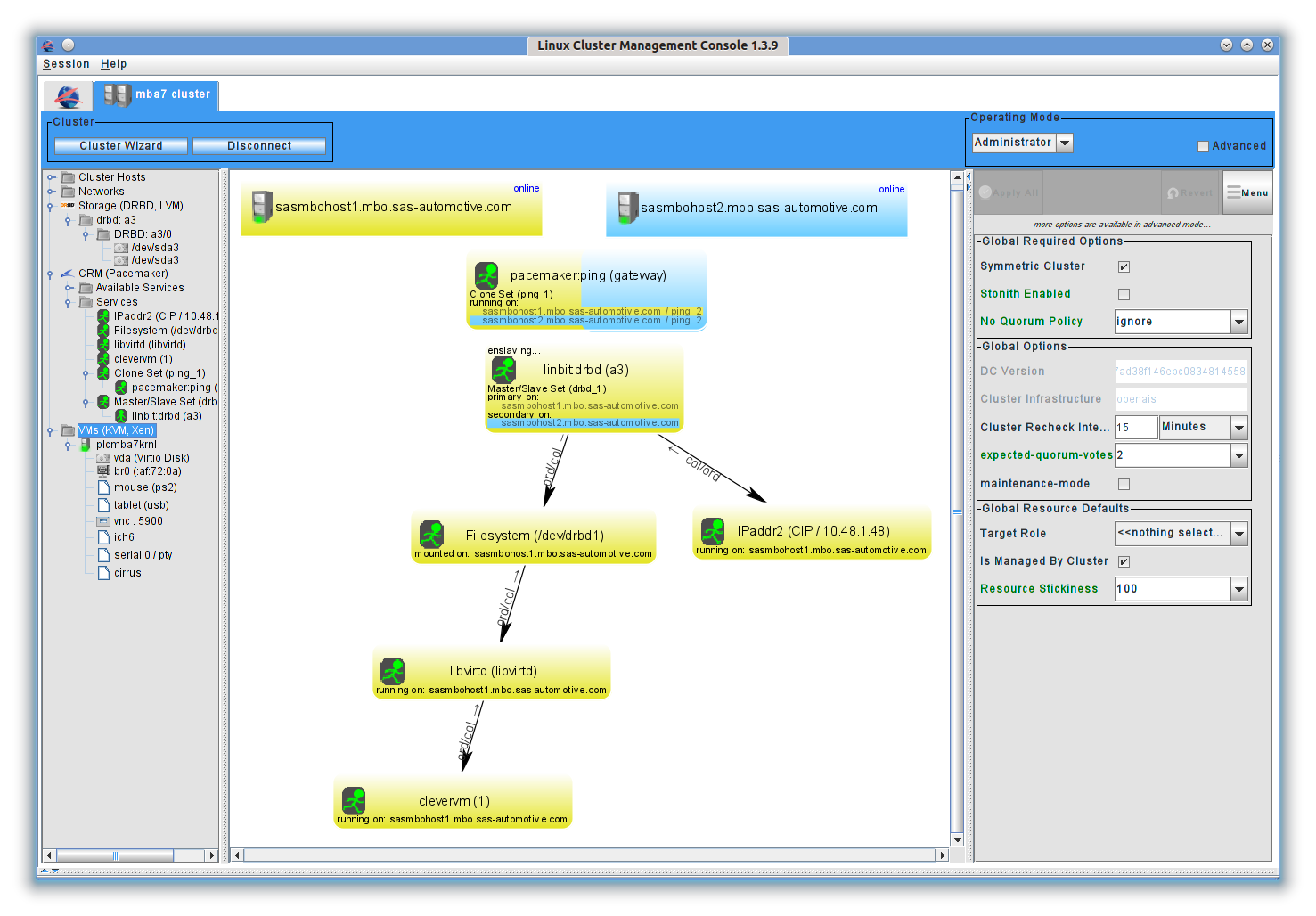


Revision as of 10:59, 21 December 2012

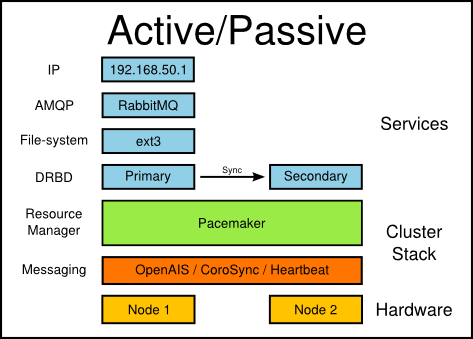

Clever Cluster is an HA (high availability) cluster solution that keeps a critical Clever server running when a hardware failure occurs. The Clever server is a virtual machine that can be hosted on any of several cluster nodes. To make that possible, the virtual drive of the Clever server has to be stored on a shared resource.

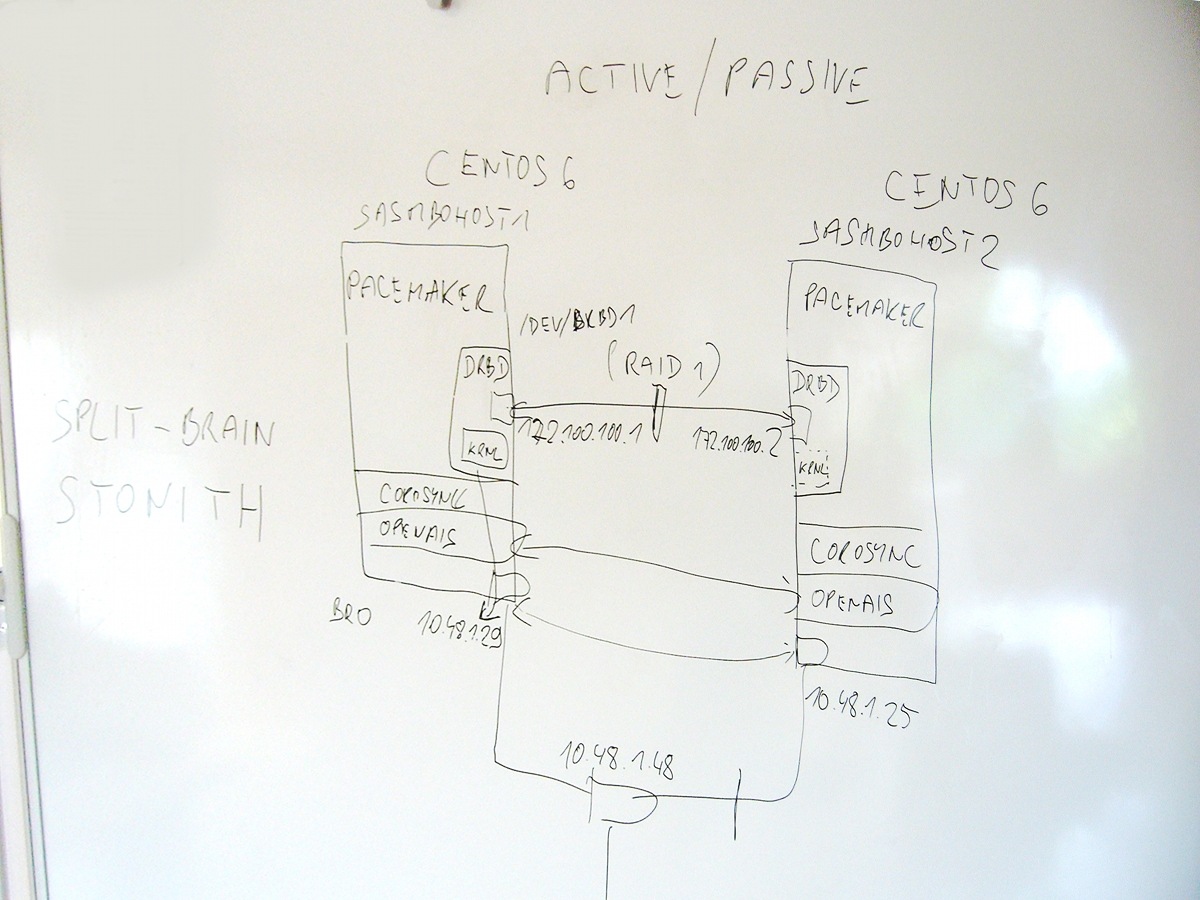

We use a technology called DRBD (Distributed Replicated Block Device) for that purpose. DRBD can be seen as RAID 1 over a network. Only one node can be the DRBD primary at a time; the others are DRBD secondaries. The DRBD block device can be mounted only in the primary role. In the secondary role, a node just synchronizes its state with the primary DRBD node. To make the synchronization reliable, the nodes must be interconnected with a direct network link, and that connection must be kept working at all costs. We therefore recommend bonding two or more dedicated NICs for this single purpose.

When a hardware failure occurs on the active (primary) node, any currently inactive node can be promoted to the primary role, the shared storage can be mounted there, and the virtual machine can be started on that node without much hassle. The new primary node should contain all the changes written to the DRBD storage before the failure, because any modifications are transferred to the secondary nodes almost immediately.
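Whether the secondary really holds all the changes can be read from the DRBD state line, such as the one in the "DRBD storage" part of the `service clevervm status` output on this page. The following is a minimal sketch in Python that splits such a line into its fields. It assumes the layout seen in that output (resource id, connection state, local/peer role, local/peer disk state); the names `DrbdState`, `parse_drbd_line` and `is_fully_replicated` are hypothetical helpers and not part of DRBD or of the clevervm service.

```python
from typing import NamedTuple


class DrbdState(NamedTuple):
    connection: str   # e.g. "Connected"
    local_role: str   # e.g. "Primary"
    peer_role: str    # e.g. "Secondary"
    local_disk: str   # e.g. "UpToDate"
    peer_disk: str    # e.g. "UpToDate"


def parse_drbd_line(line: str) -> DrbdState:
    """Parse one DRBD state line (layout assumed from the status output)."""
    fields = line.split()
    # fields[0] is the resource id, e.g. "1:a3/0"; it is not needed here.
    connection = fields[1]
    local_role, peer_role = fields[2].split("/")
    local_disk, peer_disk = fields[3].split("/")
    return DrbdState(connection, local_role, peer_role, local_disk, peer_disk)


def is_fully_replicated(state: DrbdState) -> bool:
    """True when the nodes are connected and both disks are up to date."""
    return (
        state.connection == "Connected"
        and state.local_disk == "UpToDate"
        and state.peer_disk == "UpToDate"
    )


line = ("1:a3/0 Connected Primary/Secondary UpToDate/UpToDate "
        "A r----- /opt ext4 253G 107G 134G 45%")
state = parse_drbd_line(line)
print(state.local_role, state.peer_role, is_fully_replicated(state))
```

A check like this only reports the replication state at one moment; it does not replace the cluster stack's own monitoring.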

Such a fail-over procedure is simple, but it would require human intervention. We also need to make sure that one and only one node acts as primary at a time. To automate the whole process, we need an enterprise-level cluster stack.

The cluster stack of choice in our case is Pacemaker + Corosync (an OpenAIS implementation), a solution preferred by both Red Hat and Novell.

More about Pacemaker: http://www.clusterlabs.org and http://www.slideshare.net/DanFrincu/pacemaker-drbd

Pacemaker's job is to keep the replicated DRBD storage mounted on one and only one active node and, as a dependency, to move the virtualized Clever server to that node.

At the same time, it tests external connectivity on all nodes using pingd (which sends ICMP packets to the gateway) and automatically promotes a node that has not lost connectivity to the primary role.
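The connectivity rule can be illustrated with a small toy model in Python. This is only a sketch of the decision described above, not Pacemaker's actual scoring algorithm; the function `choose_primary` and the node names `host1` and `host2` are hypothetical.

```python
from typing import Dict, Optional


def choose_primary(current: Optional[str],
                   reachable: Dict[str, bool]) -> Optional[str]:
    """Pick the node that should hold the primary role.

    `reachable` maps a node name to the result of its gateway ping.
    The current primary keeps its role while it still has connectivity.
    """
    if current is not None and reachable.get(current, False):
        return current
    # Otherwise fail over to a node that can still reach the gateway
    # (sorted only to make the choice deterministic in this sketch).
    for node in sorted(reachable):
        if reachable[node]:
            return node
    return None  # no node has connectivity, so nothing can be promoted


# host1 is primary but has lost its link to the gateway.
print(choose_primary("host1", {"host1": False, "host2": True}))
```

In the real cluster this decision is made by Pacemaker from the ping resource results and the configured constraints.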

As a result of the defined dependencies, the virtualized server is moved together with the DRBD storage. In a controlled migration, the virtual machine is first hibernated on the original node and then resumed on the target node. The whole operation takes about 2 minutes or less, and even an open SSH session, for example, survives it. Migration can be initiated manually, either with a command or with a special application.

Another resource that is automatically moved together with the primary DRBD storage is a special IP address denoting the active node.

It is recommended to install Linux Cluster Management Console for convenient cluster management: http://sourceforge.net/projects/lcmc/files/

From the console, the cluster can be controlled through the LSB service clevervm, which I created for this purpose (with a few exceptions, it does not matter on which node the commands are entered):

service clevervm status

Displays the detailed cluster status, for example:

============

Last updated: Thu May 31 12:42:05 2012

Last change: Thu May 31 12:33:14 2012 via cibadmin on sasmbohost1.mbo.sas-automotive.com

Stack: openais

Current DC: sasmbohost1.mbo.sas-automotive.com - partition with quorum

Version: 1.1.6-3.el6-a02c0f19a00c1eb2527ad38f146ebc0834814558

2 Nodes configured, 2 expected votes

8 Resources configured.

============

Node sasmbohost2.mbo.sas-automotive.com: online

res_drbd_1:1 (ocf::linbit:drbd) Master

res_Filesystem_1 (ocf::heartbeat:Filesystem) Started

res_clevervm_1 (lsb:clevervm) Started

res_libvirtd_libvirtd (lsb:libvirtd) Started

res_ping_gateway:1 (ocf::pacemaker:ping) Started

res_IPaddr2_CIP (ocf::heartbeat:IPaddr2) Started

Node sasmbohost1.mbo.sas-automotive.com: online

res_ping_gateway:0 (ocf::pacemaker:ping) Started

res_drbd_1:0 (ocf::linbit:drbd) Slave

Inactive resources:

Migration summary:

* Node sasmbohost2.mbo.sas-automotive.com:

* Node sasmbohost1.mbo.sas-automotive.com:

====== DRBD storage =======

1:a3/0 Connected Primary/Secondary UpToDate/UpToDate A r----- /opt ext4 253G 107G 134G 45%

==== Virtual machines =====

---------------------------

Name: plcmba7krnl

State: running

Managed save: no

---------------------------
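The rule that exactly one node may hold the DRBD master role can be checked against output like the above. The following Python sketch parses the per-node resource lines and counts the masters. It assumes the line layout shown in the example status; `drbd_roles` and `has_single_master` are hypothetical helper names, and the sample text is an excerpt of the output above.

```python
import re
from typing import Dict

NODE_RE = re.compile(r"^Node (\S+): (\w+)$")
RES_RE = re.compile(r"^(\S+) \((\S+)\) (\w+)$")

SAMPLE_STATUS = """
Node sasmbohost2.mbo.sas-automotive.com: online
res_drbd_1:1 (ocf::linbit:drbd) Master
res_Filesystem_1 (ocf::heartbeat:Filesystem) Started
Node sasmbohost1.mbo.sas-automotive.com: online
res_ping_gateway:0 (ocf::pacemaker:ping) Started
res_drbd_1:0 (ocf::linbit:drbd) Slave
"""


def drbd_roles(status_text: str) -> Dict[str, str]:
    """Map each node name to the role of its DRBD resource."""
    roles: Dict[str, str] = {}
    node = None
    for raw in status_text.splitlines():
        line = raw.strip()
        node_match = NODE_RE.match(line)
        if node_match:
            node = node_match.group(1)
            continue
        res_match = RES_RE.match(line)
        # Only the DRBD resource agent carries a Master/Slave role.
        if res_match and node and res_match.group(2) == "ocf::linbit:drbd":
            roles[node] = res_match.group(3)
    return roles


def has_single_master(roles: Dict[str, str]) -> bool:
    """True when exactly one node reports the Master role."""
    return list(roles.values()).count("Master") == 1


roles = drbd_roles(SAMPLE_STATUS)
print(roles)
print(has_single_master(roles))
```

Lines that match neither pattern, such as the header or the migration summary, are simply skipped.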

service clevervm start

Starts the virtual machine(s). However, if the service is marked as stopped in Pacemaker, this causes an inconsistency that then has to be resolved. I recommend not using this command, just as I recommend not starting the virtual machine with virsh, to which this command is equivalent.

service clevervm forcestart

Starts the virtual machine(s) through Pacemaker. This is useful if the service was previously marked as stopped in Pacemaker.

service clevervm stop

Stops (hibernates) the virtual machine(s). However, if the service is marked as started in Pacemaker, Pacemaker will start it again. I recommend not using this command, just as I recommend not hibernating the virtual machine with virsh, to which this command is equivalent.

service clevervm forcestop

Stops the virtual machine(s) through Pacemaker and marks the service as stopped.

service clevervm cleanup

Cleans up the service state: deletes any failure records, which allows the service to be started again if the number of permitted failures on the given node has been reached.
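The effect of cleanup can be pictured with a toy failure counter in Python. This is a simplified sketch of the behaviour described above, not Pacemaker's implementation; the class `FailureTracker`, the limit of 3 and the node name `host1` are assumptions made for illustration.

```python
from typing import Dict, Optional


class FailureTracker:
    """Toy model: a service may not start on a node that failed too often."""

    def __init__(self, max_failures: int) -> None:
        self.max_failures = max_failures
        self.fail_counts: Dict[str, int] = {}

    def record_failure(self, node: str) -> None:
        self.fail_counts[node] = self.fail_counts.get(node, 0) + 1

    def may_start_on(self, node: str) -> bool:
        # The node is blocked once the permitted number of failures is reached.
        return self.fail_counts.get(node, 0) < self.max_failures

    def cleanup(self, node: Optional[str] = None) -> None:
        """Forget recorded failures for one node, or for all nodes."""
        if node is None:
            self.fail_counts.clear()
        else:
            self.fail_counts.pop(node, None)


tracker = FailureTracker(max_failures=3)  # the limit is a hypothetical value
for _ in range(3):
    tracker.record_failure("host1")
print(tracker.may_start_on("host1"))  # blocked after three failures
tracker.cleanup("host1")
print(tracker.may_start_on("host1"))  # allowed again after cleanup
```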

service clevervm migrate

Forces Pacemaker to migrate the primary DRBD storage (and with it all dependent services) away from this node, i.e. to the opposite node. This node is then marked as ineligible to run services. To remove this restriction, the unmigrate command must be called after the migration.

service clevervm unmigrate

Removes the "ineligible to run services" flag from this node. This does not automatically move the services back, because a certain inertia (stickiness) applies, which prevents the services from fluctuating between nodes.
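Why services stay where they are after unmigrate can be shown with a toy placement model in Python. It is a sketch under simplifying assumptions: a banned node gets a score of minus infinity, and the node currently running the services gets a stickiness bonus. The function `place`, the stickiness value of 100 and the node names are hypothetical and do not reflect the real cluster configuration.

```python
import math
from typing import List, Optional, Set


def place(current: Optional[str], nodes: List[str], banned: Set[str],
          stickiness: int = 100) -> Optional[str]:
    """Return the node with the highest score, or None if all are banned."""
    scores = {}
    for node in nodes:
        if node in banned:
            scores[node] = -math.inf      # marked ineligible by "migrate"
        elif node == current:
            scores[node] = stickiness     # bonus for staying in place
        else:
            scores[node] = 0
    best = max(nodes, key=lambda n: scores[n])
    return best if scores[best] > -math.inf else None


nodes = ["host1", "host2"]
# "migrate" on host1: host1 is banned, so the services move to host2.
after_migrate = place("host1", nodes, banned={"host1"})
# "unmigrate": the ban is lifted, but stickiness keeps the services on host2.
after_unmigrate = place(after_migrate, nodes, banned=set())
print(after_migrate, after_unmigrate)
```

In this model the services return to the first node only if something outweighs the stickiness bonus, for example a failure of the second node.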

Split-brain

DRBD split-brain recovery: http://www.drbd.org/users-guide/s-resolve-split-brain.html